Authentication & Authorization in a Microservices Architecture (Part 2)

IT Tips & Insights: In part two of this three-part series, a Softensity Engineer details how to design authentication in microservices.

By Gustavo Regal, Software Engineer

Your BFF should guarantee that requests coming to your microservices are authenticated.

But also enforce authentication at the pod level using a sidecar approach, which brings benefits in security, performance and availability.

Authentication in Microservices Architecture

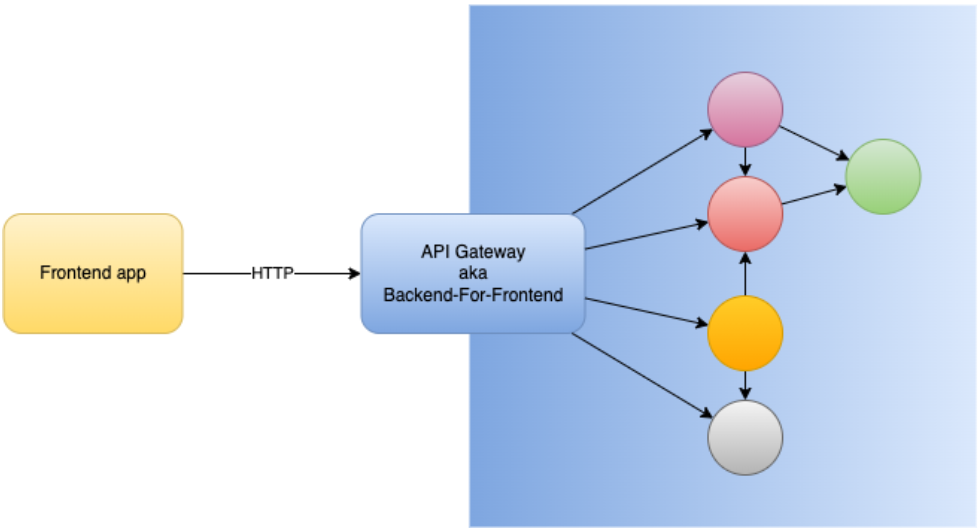

In Part 1 we took a look at how authentication and authorization look in a monolith. Now let’s take the red pill and see how the world looks on the other side. When you have a microservices architecture, you may have one single frontend app, but in the cloud you might have dozens or hundreds of services, each one probably with its own database, like below:

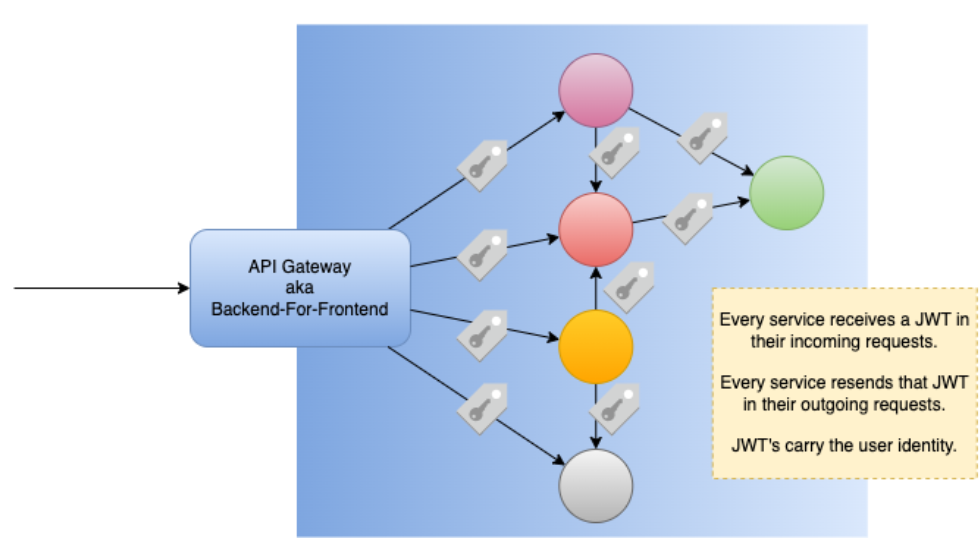

The BFF and Authentication at the Edge

The first thing to notice in the figure above is the presence of an API Gateway (aka BFF) in the center, serving as a bridge between the outer world (where the client frontend app resides) and the inner world (where all the microservices reside). That is a common topology we find today. You can see that no request from the outer world reaches the upstream services directly. They only get there when routed by the BFF. It’s like a private network. A network in which microservices can talk among themselves and receive communication from the BFF, but not from the internet.

There are many reasons why people model solutions this way, and authentication is one of them. When you look at that figure and remember the S in SOLID (Single-Responsibility Principle, aka SRP), you might think: “I don’t want each and every service to be concerned with authentication.” Nor would the user appreciate having to log in to several services independently.

One possible solution: put the BFF in charge of making sure every request is authenticated before it forwards that request to any of the upstream services. One simple way to do that is the following:

Step 1:

Every time the BFF receives a request, it checks for the presence of an access token. If the token is present and valid, the BFF lets the request be processed and/or forwarded upstream. If the token is not present or not valid, the BFF rejects the request with a 401 status.

Step 2:

One of your microservices is a Users Service, which stores user data, such as usernames, passwords, emails, etc.

Step 3:

In your frontend, you have a login form that is launched every time a 401 status happens. That form posts credentials to the BFF’s /sign-in endpoint, which interacts with the Users Service to make sure those credentials are valid and issue the user an access token or a session token.

In the topology described above, the BFF is acting as an authentication service, issuing tokens when a user provides valid credentials.
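The three steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the `USERS` dictionary stands in for the Users Service, the tokens are random opaque strings kept in memory, and the names `sign_in` and `handle_request` are hypothetical, not part of any framework.

```python
import secrets

# Hypothetical stand-in for the Users Service: username -> password.
# A real service would store salted password hashes, never plain text.
USERS = {"alice": "correct-horse"}

# Opaque tokens issued by the BFF, mapped to the user they belong to.
ISSUED_TOKENS = {}


def sign_in(username, password):
    """Step 3: check the credentials and issue an access token."""
    stored = USERS.get(username)
    if stored is None or not secrets.compare_digest(
        stored.encode("utf-8"), password.encode("utf-8")
    ):
        return 401, None
    token = secrets.token_urlsafe(32)
    ISSUED_TOKENS[token] = username
    return 200, token


def handle_request(headers, forward):
    """Step 1: reject unauthenticated requests, forward the rest upstream."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer" or token not in ISSUED_TOKENS:
        return 401, "Unauthorized"
    # `forward` represents the call to an upstream microservice.
    return 200, forward(ISSUED_TOKENS[token])
```

A frontend would call `sign_in` after receiving a 401, then resend the original request with an `Authorization: Bearer <token>` header.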

Authentication service: a service that applications use to authenticate the credentials, usually account names and passwords, of their users. When a client submits a valid set of credentials, it receives a cryptographic ticket that it can subsequently use to access various services. (Source)

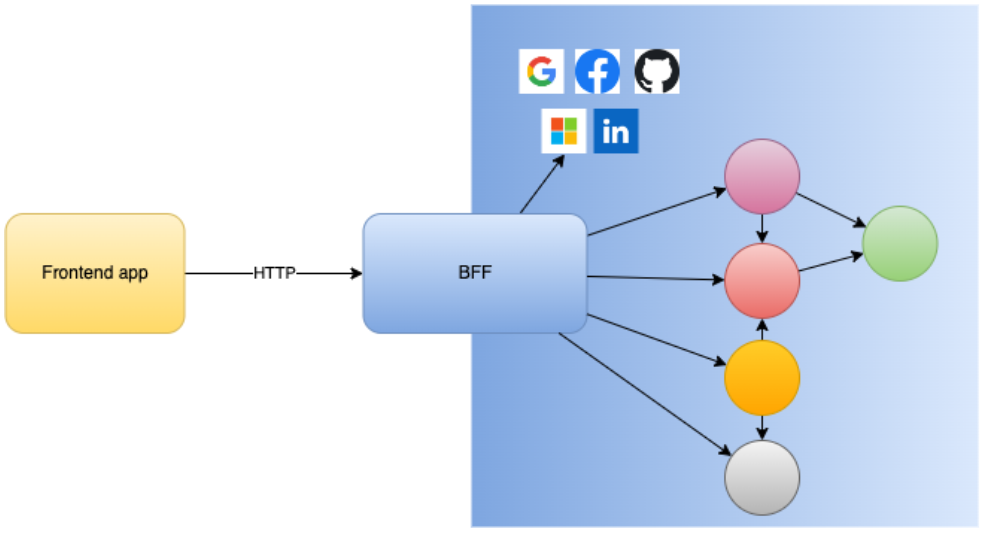

Identity Providers

Another useful approach is to rely on Identity Providers, such as Google, Facebook, Microsoft, GitHub and others. As many users already have an account set up on those platforms, your application can integrate with them, which gives your solution some advantages:

- The users won’t have to create and memorize a new password for your system.

- You may not need to implement that part on your system (login form, credentials authentication, token generation, user registration forms …).

- The users can have a single sign-on experience, i.e., if they are already logged in to Gmail, for example, they can just open your web app in a new browser tab and be logged in automatically there as well.

Considerations

Whether you use a complete in-house solution or public identity providers, the approach described so far focuses on making sure the requests are authenticated at the edge, before they enter the microservices realm. But what happens at the microservice level?

One easy thought here is:

“That private network is reliable in terms of authentication. If I am a microservice and I have received a request, I can just TRUST. I don’t need to verify access tokens, etc., because the BFF did it for me.”

This approach is coherent with the SRP (Single-Responsibility Principle), but does have some drawbacks:

- Bottleneck: every request to every service is going to be verified for authentication at the BFF level, i.e., that workload is not going to be distributed.

- SPOF (Single Point Of Failure): if there is any problem with the authentication feature in the BFF, then there is a problem with authentication in the whole system.

- Security: security advocates will tell you to never rely on a single security layer; instead you should overlap different security measures.

- Check out Zero Trust Architecture; the name speaks for itself.

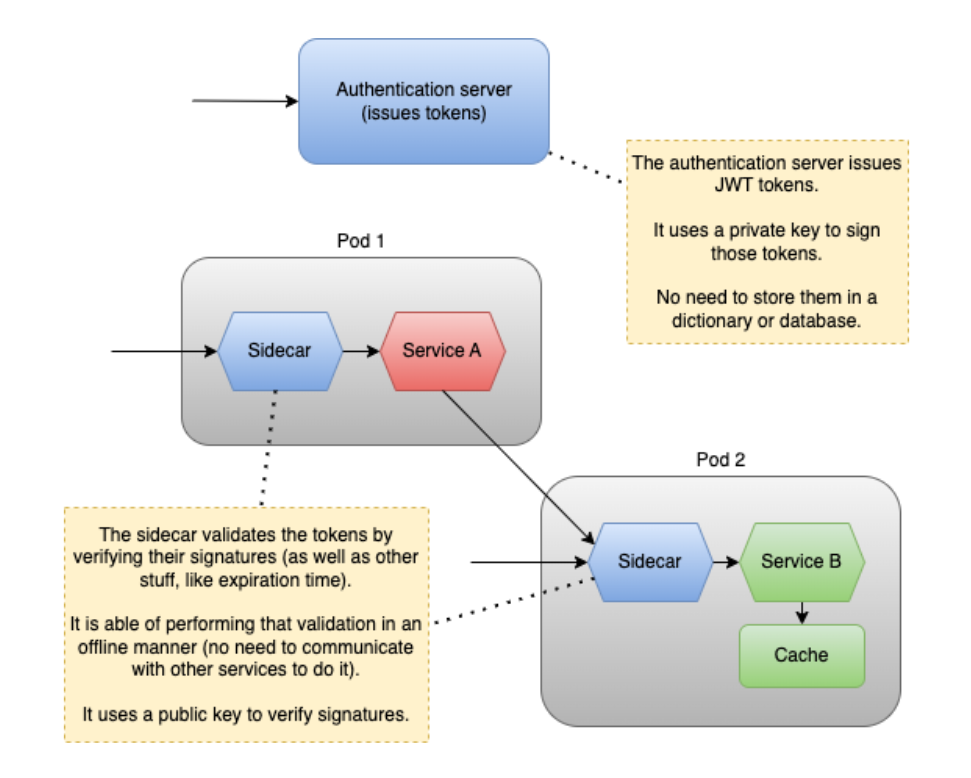

Pod Architecture to the Rescue

If the services shouldn’t be relying on the BFF for token validation, then they should validate it themselves. But then you would have to repeat the token validation logic in every service implementation, violating the SRP and saying goodbye to the productivity that was promised to you when you accepted entering the microservices world. What to do, then?

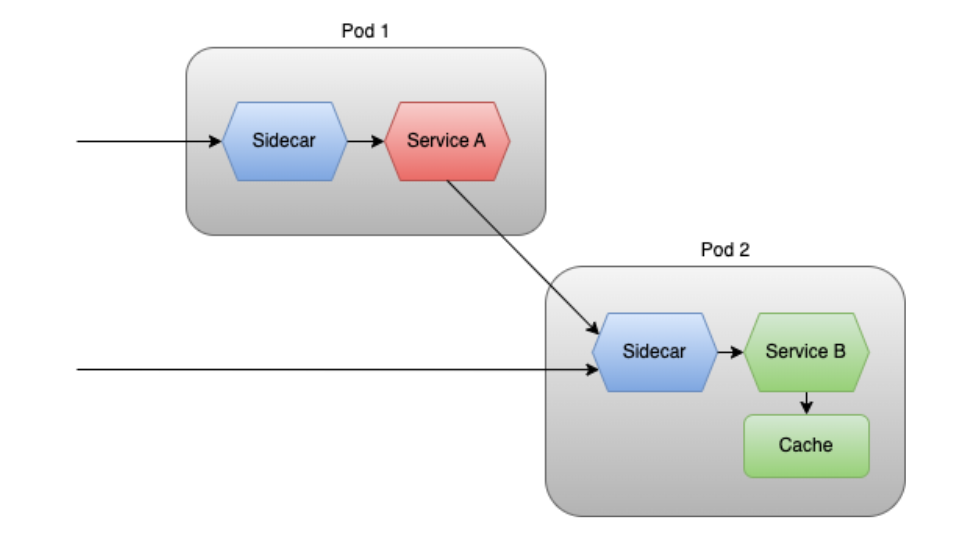

Well, for people running their services in containers, and their containers in pods (as in Kubernetes), there is a nice trick: sidecars!

Sidecars look like this:

Your service is the motorbike. The sidecar is the sidecar.

They also look like this:

Here you can see the following:

- 2 pods, each one running a different service container plus a sidecar container.

- Requests ingressing the pod are only allowed to hit the sidecar, which acts as a proxy.

- Communication with the services is only done through the sidecar.

- Both pods use different instances of the same blue sidecar implementation.

That means one can implement the token validation logic in a service called “authentication sidecar.” That blue service is going to be owned by only one team, but it is going to be used in every team’s pods.

By doing that, you are once again compliant with the SRP, and your services stay lean and focused on their own singular concerns. You are also distributing the token verification workload across the pods and implementing a zero trust architecture.

But how are tokens verified?

So far in this article we haven’t specified any details about the access tokens. For all intents and purposes until this point, they could be opaque tokens or structured (transparent) tokens.

Let’s clarify:

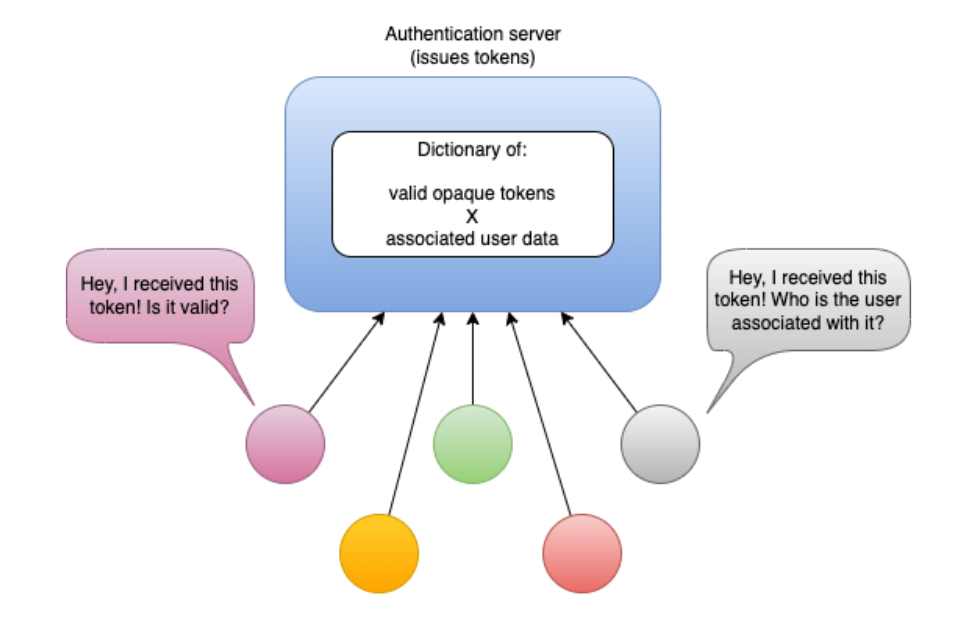

- Opaque token: A token that, for you, is just a long random string; it doesn’t carry any meaning except that it grants you access to whatever resource you authenticated for. Session id cookies normally fall into this category; the server keeps the secure information associated with that id/token somewhere on its side.

- Structured (transparent) token: A kind of token that you or anyone else can read. It has data written in it. The most famous structured token nowadays is certainly JWT.

All of this means that only the issuer of opaque tokens knows whether they are valid and what user data is associated with them. Therefore, if your architecture relies on opaque tokens, then how will the services (or sidecars) validate them? It seems like they will have to ask the token issuer:

Too bad … that would result once again in a bottleneck, a SPOF and all that. Your solution would perform poorly, would not be cost effective and would have lower availability.
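To make the cost concrete, here is a minimal sketch in which every service has to ask the issuer about every opaque token it receives. The `TokenIssuer` and `Service` classes are hypothetical, and the `introspect` method stands in for what would be a network call in a real deployment.

```python
import secrets


class TokenIssuer:
    """Hypothetical issuer of opaque tokens; only it knows what they mean."""

    def __init__(self):
        self._sessions = {}
        self.introspection_calls = 0

    def issue(self, user_id):
        token = secrets.token_urlsafe(32)
        self._sessions[token] = user_id
        return token

    def introspect(self, token):
        # In a real system this would be a remote call to the issuer.
        self.introspection_calls += 1
        return self._sessions.get(token)


class Service:
    """A microservice that cannot interpret the token by itself."""

    def __init__(self, name, issuer):
        self.name = name
        self.issuer = issuer

    def handle(self, token):
        user_id = self.issuer.introspect(token)
        return 200 if user_id is not None else 401


issuer = TokenIssuer()
token = issuer.issue("123456789")
services = [Service(f"service-{i}", issuer) for i in range(3)]
statuses = [service.handle(token) for service in services]
```

One user request fanning out to three services already costs three round trips to the issuer, and if the issuer is down, none of the services can authenticate anything.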

That is just one of the reasons why JWTs have become so popular. They can be parsed by anyone, and validated offline by anyone who holds the right key or secret, without calling the issuer.

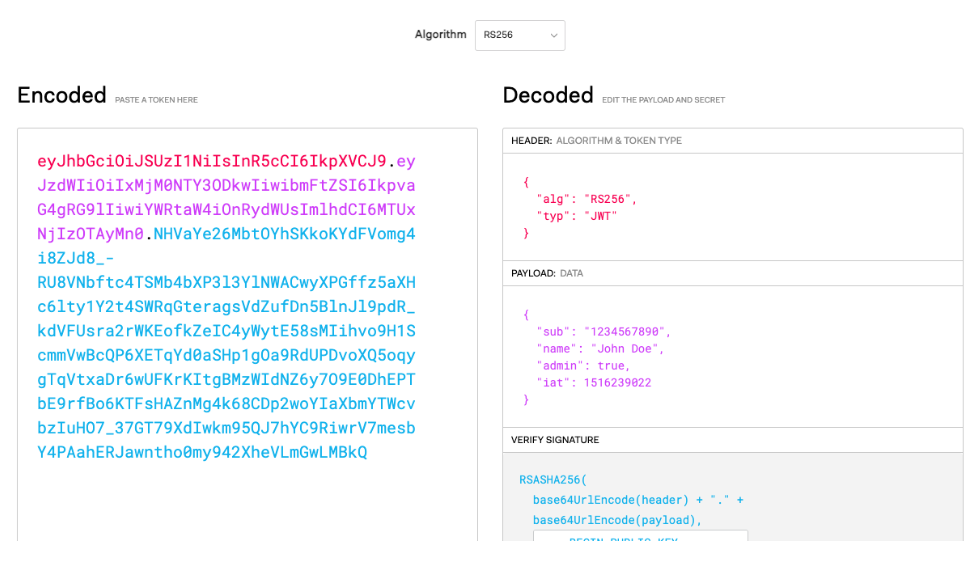

Just enough JWT

A JSON Web Token is just a token whose data is structured in a JSON format.

It features:

- A header: which makes it clear that this is a JWT. It also declares what type of algorithm was used to sign that token.

- A payload: that contains information on the principal (the user, for simplicity), on the token itself (like when it was issued, when it will expire …) and whatever else you wish to add.

- A signature: a very important part! It is generated from the combination of the header, the payload and a secret (or a private key, for asymmetric algorithms). This prevents the token from being tampered with undetected, so anyone who verifies that the signature is valid knows they can trust the token.

After you have those parts, just Base64URL-encode each of them, concatenate them with dots as separators, and there you have your JWT, ready for transit.
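The assembly described above can be sketched with the Python standard library alone. Note the assumptions: this sketch signs with HS256 (HMAC with a shared secret) because it needs no third-party package, the helper names `b64url`, `make_jwt` and `signature_is_valid` are hypothetical, and it checks the signature only, not claims such as expiry. In production you would normally use a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """Base64URL-encode without padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_jwt(payload: dict, secret: bytes) -> str:
    """Build header.payload.signature, signed with HS256."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return signing_input + "." + b64url(signature)


def signature_is_valid(token: str, secret: bytes) -> bool:
    """Recompute the signature over header.payload and compare."""
    try:
        signing_input, signature = token.rsplit(".", 1)
    except ValueError:
        return False  # no dot at all: not a JWT
    expected = hmac.new(secret, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return hmac.compare_digest(b64url(expected).encode("ascii"), signature.encode("utf-8"))
```

Changing a single character of the header or payload changes the expected signature, which is why a tampered token fails the check.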

As for the signature, there is a particular algorithm called RS256 (an RSA signature with SHA-256), which is asymmetric. This is particularly interesting because one key (the private key) is used to sign tokens, and another key (the public key) is used to verify that signature. That means only the authentication service possesses the private key, while the corresponding public key can be distributed freely to whoever needs to verify tokens.

Here is a JWT at jwt.io, in which a user is identified by the claim sub, with value 123456789 (that’s just the user’s id in the system):
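Because the payload is only encoded, not encrypted, anyone holding a token can read its claims without any key. The sketch below builds an illustrative token in code (the signature is a placeholder, and the helper names are hypothetical) and reads the `sub` claim back. Reading claims this way is for inspection only; it proves nothing about authenticity until the signature is verified.

```python
import base64
import json


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    # Restore the padding that JWTs strip, then decode.
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def read_claims(token: str) -> dict:
    """Decode the payload WITHOUT verifying the signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


# Illustrative token: real header and payload, placeholder signature.
example = ".".join([
    b64url_encode(b'{"alg":"RS256","typ":"JWT"}'),
    b64url_encode(b'{"sub":"123456789"}'),
    "signature-omitted",
])
claims = read_claims(example)
```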

Therefore…

If our microservices pass JWTs along every time they communicate, specifically JWTs carrying the identity of the user, such as the ones in OpenID Connect, then the user is always known.

With all that in mind, the following might be a good solution for you (as it has been for many):

The services themselves are spared from implementing token validation logic, because the sidecar does it for them:

Also, as the user is always known to the services, they can easily generate logs, audits, etc.
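Here is a rough sketch of that division of labour: the sidecar validates the token and the service only ever sees a verified user id. Several things here are assumptions made to keep the example self-contained: it uses HS256 with a shared key instead of RS256 (in the design above the sidecar would hold only the public key), `issue_token` stands in for the authentication service, and `sidecar` and `orders_service` are hypothetical names.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(signing_input: str, key: bytes) -> str:
    digest = hmac.new(key, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return b64url_encode(digest)


def issue_token(claims: dict, key: bytes) -> str:
    """Stand-in for the authentication service."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode("utf-8"))
    body = b64url_encode(json.dumps(claims).encode("utf-8"))
    signing_input = header + "." + body
    return signing_input + "." + sign(signing_input, key)


def sidecar(headers: dict, key: bytes, service, now=None):
    """Validate the bearer JWT, then hand the request to the local service."""
    now = time.time() if now is None else now
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    parts = token.split(".")
    if scheme != "Bearer" or len(parts) != 3:
        return 401, "missing or malformed token"
    expected = sign(parts[0] + "." + parts[1], key)
    if not hmac.compare_digest(expected.encode("ascii"), parts[2].encode("utf-8")):
        return 401, "bad signature"
    claims = json.loads(b64url_decode(parts[1]))
    if "sub" not in claims or claims.get("exp", 0) <= now:
        return 401, "expired or incomplete token"
    return 200, service(claims["sub"])


def orders_service(user_id: str) -> str:
    """The service never parses the token; it only receives the user id."""
    return f"orders for user {user_id}"
```

Since the service receives the user id with every request, writing it into logs or audit records needs no extra lookup.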

Fortunately, this kind of problem has already been solved by the community. For example, check out Envoy. A sidecar running Envoy can be configured to act as a proxy that validates JWTs for authentication, using configuration you provide, such as the public key to use and other validation settings.

In Part 2 we focused on how to design authentication in microservices. Check out Part 3 for an overview of authorization.

About

Hey, I’m Gustavo Regal. I live in Porto Alegre (Brazil) and I’ve been a software engineer and a few more things since 2007, during which time I have designed and developed lots of different kinds of systems for all sorts of industries.

I am currently working as a tech lead and architect at Softensity, building distributed solutions using Azure, C# and other software.

Thank you for taking the time to read this blog. I would love to get your feedback and contributions.
